Mono vs. stereo audio is a never-ending debate among music enthusiasts. When you start your career in audio recording, one of the most confusing questions is whether you should record on a mono track or a stereo track.

All DAWs can record both mono and stereo tracks, which makes it even harder to decide which one to use in your project.

If you are an experienced recording engineer or producer, you can leave here, because I’m going to discuss the basics of mono and stereo audio that I think every experienced recording engineer already knows.

BUT, if you are just starting your recording career, then this article is for you.

So, assuming you are a newbie, let’s start the discussion.

First,

Table of Contents

- 1 Mono Audio

- 2 Stereo Audio

- 3 Why Stereo is Better Than Mono?

- 4 The stereo field

- 5 Stereo Imaging

- 6 Application of Stereo Audio

- 7 LCR Panning

- 8 Recording vocals: Mono or Stereo Which one is better?

- 9 Recording Other Instruments: Mono or Stereo?

- 10 A/B Technique Recording in Stereo

- 11 Mono to Stereo Conversion Methods and Techniques

- 12 Dynamics: Is stereo louder than mono?

- 13 Bottom Line: Mono Vs Stereo

Mono Audio

The full form of Mono is “Monophonic”, which translates into “one sound”.

We can break this down as: Mono (from the Greek monos) = one, and Phonic (from the Greek phonē) = sound.


Any sound that comes from a natural sound source and is not mixed with any effect such as delay or reverb is a mono sound, for example a guitar or a human voice.

Actually, every sound in nature is a mono sound, but because we have two ears, we can manipulate those sounds by splitting them into stereo tracks and adding several types of effects to make them sound better.

When we record this sound with a single microphone, it’s called mono recording. We use mono tracks to record mono sound in DAWs and sound recorders.

If you record multiple sound sources with one mic, it’s still a mono recording because you are recording with a single microphone and recording on a mono track.

Mono recording was most common in the early stages of audio recording technology. But today, what we listen to is stereo sound manipulated to make the music sound better.

So when a sound is recorded through one channel in a recording device, then it’s called mono audio.

Stereo Audio

The full form of Stereo is “Stereophonic”, which translates into “solid sound”, that is, a full, three-dimensional sound.

We can break this down as: Stereo (from the Greek stereos) = solid or full, and Phonic (from the Greek phonē) = sound.

When a sound is recorded with two mics and the signals are sent to two separate channels, this is called stereo recording. Here you can see that we are recording the same mono sound with two different mics or recording devices.

So, the sound is mono in nature, but we are manipulating this sound to make it stereo. As we have two ears, engineers use this to make the mono sound better and more dramatic.

Almost all sound systems are stereo today, whether phones, laptop speakers, headphones, movie theatres, video games, or PA systems. So it’s essential to convert mono audio to stereo to support these systems.

Stereo audio is of two types: True Stereo and Pseudo Stereo.

- True Stereo: When a sound is recorded with two microphones or recording devices, it’s called true stereo.

- Pseudo Stereo: This is a virtual stereo that is achieved by converting a mono sound, recorded with one microphone, to stereo inside the system. Almost all sound systems today support Pseudo Stereo. These systems convert mono sound into stereo for playback in stereo mode.

BUT, the question is,

Why Stereo is Better Than Mono?

Our mind observes much more than we think about. In a real environment, sound comes from all directions. Our ears capture those sounds, and the best thing is that our brain can tell us the direction of sound sources by differentiating between them.

When a sound comes to our ears, one ear captures the sound first and the other a bit later. This happens because each ear sits at a different distance and position relative to the sound source. Our brain uses this information to decide the direction and distance of the sound source.
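To put a rough number on that arrival-time gap, here is a minimal Python sketch. It is not from this article's sources: it assumes a very simplified model (a distant source, a straight-line path, and the head itself ignored), an ear spacing of about 0.2 m, and a speed of sound of 343 m/s. The function name `interaural_delay_ms` is hypothetical.

```python
import math

SPEED_OF_SOUND_M_S = 343.0   # assumed: air at about 20 °C
EAR_SPACING_M = 0.20         # assumed: rough distance between the ears


def interaural_delay_ms(angle_deg, spacing_m=EAR_SPACING_M):
    """Arrival-time gap between the two ears, in milliseconds.

    angle_deg is the source direction: 0 = straight ahead, 90 = fully to one side.
    Simplified model: distant source, straight-line path, head ignored.
    """
    extra_path_m = spacing_m * math.sin(math.radians(angle_deg))
    return extra_path_m / SPEED_OF_SOUND_M_S * 1000.0


for angle in (0, 30, 90):
    print(f"{angle:>2} degrees -> {interaural_delay_ms(angle):.2f} ms")
```

Under these assumptions the gap stays well under a millisecond, yet the brain still uses it as a direction cue.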

Also, when a sound is produced, it bounces off obstacles such as walls and objects in the room and mixes with the original sound, which adds reverberation, delay, and similar effects to the resulting sound. These effects make you feel like you are in the middle of the sounding environment.

Suppose a player plays the guitar in a room. The sound you hear is not just the original sound emitted by the source but a mixture of the original sound and the effects caused by the environment.

But when the same guitar sound is recorded with a single microphone and in a mono track, you will get dry audio without any effects.

That’s because a mic acts as a single ear and does not add the stereo effect that our two ears add.

But when people started recording the same sound with multiple microphones and adding virtual effects to the tracks, they realized that it was possible to recreate the same environment as a natural one.

Our brain treats this stereo audio like a natural sound and makes us feel more natural with it than mono audio. Also, it’s possible with stereo audio to place different instruments in different places to create a virtual music environment.

That’s why stereo audio is better than mono audio.

The stereo field

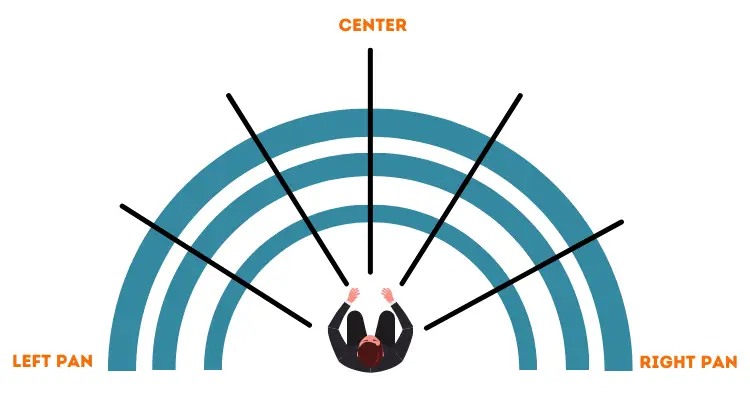

The stereo field is the space around you, from left to right and from front to back, in which the sound sources are placed.

Our brain is capable of catching the placement of sound sources around the body. When you close your eyes during a concert, you can easily recognize the actual placement of the instruments on the stage.

All of these sound positions taken together are called the stereo field.

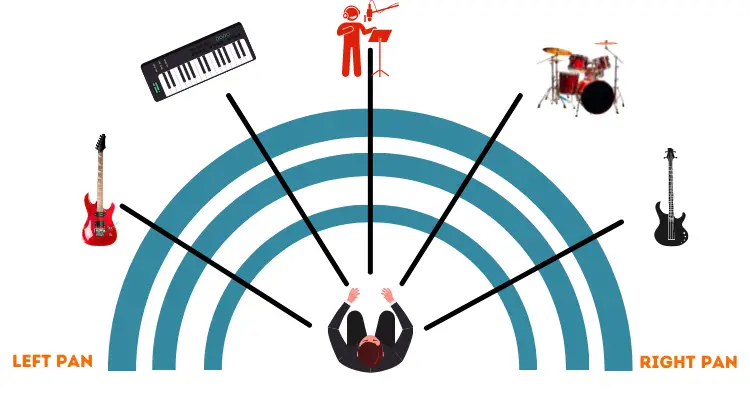

In audio recording and mixing, you can use the stereo field to place the instruments the same way they are placed at a concert. You can pan one instrument to the left, another to the right, and another to the front.

Also, reverb and delay effects are used to add the reflections you hear at concerts, where a mix of the original sound and the sound reflected from the walls enters your ears.

In audio mixing, the stereo field is used to fool our minds into assuming that we are inside the concert hall. The more naturally you use the effects to create the virtual stereo field, the more pleasant it will sound.

Creating a natural stereo field is an important part of mixing.

We cannot create a stereo field on a mono track. We can add effects and pan a mono track left and right, but we have to export the master audio as a stereo track to enjoy the stereo field in music.

Stereo Imaging

Manipulating the 180-degree stereo field by panning, adding effects, using volume faders, etc., on the instruments and vocals to put them in different places is called stereo imaging.

Although the stereo image consists of a 180-degree stereo field, we can divide it into two types according to the effects used: a 90-degree stereo image and a 180-degree stereo image.

When you use only the pan feature of your DAW to place the instruments in different positions in the stereo field, you are manipulating the 90-degree stereo image.

But when you separate a mono signal into two signals (a stereo track) and then delay one of them slightly, you create a phase difference between the two channels.

This phase difference widens the signal into different parts of the full 180-degree stereo image.
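The idea of splitting a mono signal into two channels and delaying one of them slightly can be sketched in a few lines of Python. This is only an illustration under assumptions: the samples are plain floats in a list, the delay is given in samples, and `widen_mono` is a hypothetical helper, not a function from any DAW.

```python
def widen_mono(samples, delay_samples):
    """Return (left, right): left is the dry signal, right is the same signal delayed.

    Both channels are padded with silence so they end up the same length.
    """
    left = list(samples) + [0.0] * delay_samples    # dry copy, padded at the end
    right = [0.0] * delay_samples + list(samples)   # delayed copy, padded at the start
    return left, right


mono = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0, -0.5]
left, right = widen_mono(mono, delay_samples=3)
print(left)
print(right)
```

The 3-sample delay here is only for readability. At an assumed sample rate of 44,100 Hz, a 10 ms delay would be 441 samples.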

You can also use the volume faders to increase and decrease the amplitude of the sounds so that the human brain can differentiate them as per the distance from the ears.

This whole technique is called stereo imaging.

I have a detailed guide on stereo imaging, which you should read to learn more about it.

Application of Stereo Audio

Before stereo recording was introduced, mono audio was used in various sound devices such as gramophones and mono cassette players.

But when scientists discovered the stereo field and recordists started recording in stereo, the door to countless applications of stereo imaging opened.

Today, almost all listening devices such as mobile phones, TV, radio, music players, computers, etc., come with the stereo sound feature.

So, stereo audio is widely used in the world. Recordists are now using stereo imaging in music albums, movie scores, and gaming to give them a more realistic environment.

Today there are several versions of stereo imaging in use, including LCR stereo, Dolby, Surround, Dolby Atmos, etc. But LCR stereo is the most common because it adapts to various devices, from mobile phones to car audio.

LCR Panning

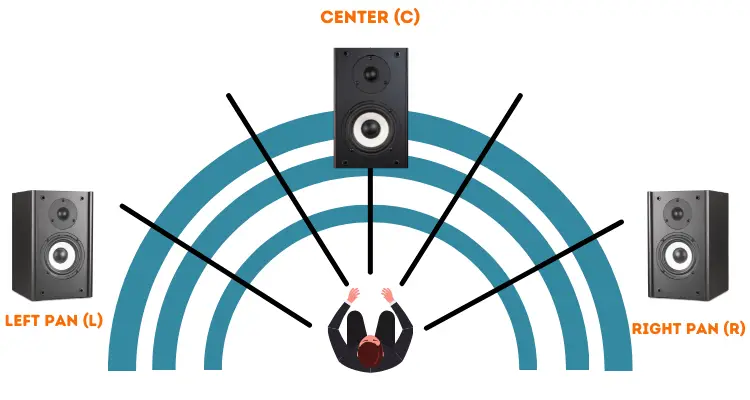

The full form of LCR is Left, Center and Right. It’s a panning technique used in audio mixers to put the sound in a particular place in the stereo field.

All sound mixers, whether an analog mixer or your DAW’s digital mixer, come with LCR panpots. Each track in the mixer has its own panpot, and the stereo out of the mixer also has a master panpot.

With these panpots, you can easily give a particular place to a sound in the stereo field.

Mix engineers have been using LCR panning for decades, and due to its reliability, it’s still the most popular method of stereo mixing.

When you use LCR in mixing, you are working with three main areas of the stereo field, i.e., 90 degrees left, 0 degrees centre, and 90 degrees right.

You place the sounds within this 180-degree stereo field.

The output comes from two or three speakers, i.e., left, centre, and right speakers. We can skip the centre speaker if we are bouncing a stereo track: a centred sound will come from both speakers with the same amplitude, so it will seem like it’s coming from the centre.

LCR panning works on the amplitude difference of the sound between one side and the other.

The panpot allows you to decrease the amplitude of one side of the stereo sound while increasing the amplitude of the other side, which makes us feel that the sound is coming from a particular place in the 180-degree stereo field.
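Here is a minimal Python sketch of how a panpot can trade amplitude between the two sides. It assumes a constant-power sine/cosine pan law, which is one common choice and not something this article specifies; real mixers and DAWs may use other laws. The function name `pan_gains` and the -1.0 to +1.0 pan range are hypothetical.

```python
import math


def pan_gains(pan):
    """Return (left_gain, right_gain) for a pan position.

    pan: -1.0 = hard left, 0.0 = centre, +1.0 = hard right.
    Assumed constant-power law: left**2 + right**2 is always 1.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1.0 and 1.0")
    angle = (pan + 1.0) * math.pi / 4.0   # maps -1..+1 onto 0..pi/2
    return math.cos(angle), math.sin(angle)


for pan in (-1.0, 0.0, 1.0):
    left_gain, right_gain = pan_gains(pan)
    print(f"pan {pan:+.1f}: L={left_gain:.3f} R={right_gain:.3f}")
```

At the centre position both sides get the same gain, which is why a centred sound seems to come from between the two speakers.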

BUT, LCR panning doesn’t work if you want to set up the sound behind the listener. Dolby 5.1 or 7.1 works great for that purpose.

Well, music is generally mixed in LCR format because most music players play music in the same format.

Although 5.1 and 7.1 have been gaining ground in the music industry in the last few years, even the most used music devices, i.e., mobile phones, still use LCR. The reason is earphones, which have only two output speakers.

BUT, these days, we also see mobile devices with virtual Dolby sound technology, which can create a virtual 360-degree stereo field with the same two-speaker earphones.

Recording vocals: Mono or Stereo Which one is better?

Whenever we talk about mono and stereo, one question that comes to mind is whether we should record vocals in mono or stereo. Which is better?

Actually, it’s always been the subject of debate among music enthusiasts.

For me, it depends on the needs of the mix engineer. If they want to create special effects for vocals, then recording vocals in stereo is worth it. Otherwise, I suggest always going with mono tracks.

See, when we record the same vocal on two different mono tracks and combine them in the mix, it creates phase cancellation issues, and you could hear choppy vocals.
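Phase cancellation is easiest to see with a single test tone. The Python sketch below is an illustration under assumptions (a 1 kHz sine wave at a 48,000 Hz sample rate, and hypothetical helper names): it sums a tone with an identical copy, then with a copy that arrives half a cycle late.

```python
import math

SAMPLE_RATE = 48000   # assumed sample rate in Hz
FREQ_HZ = 1000        # assumed test tone


def sine(num_samples, offset_samples=0):
    """A sine tone, optionally starting offset_samples later."""
    return [
        math.sin(2 * math.pi * FREQ_HZ * (n - offset_samples) / SAMPLE_RATE)
        for n in range(num_samples)
    ]


def peak(samples):
    """Largest absolute sample value."""
    return max(abs(s) for s in samples)


take = sine(480)
half_period = SAMPLE_RATE // FREQ_HZ // 2          # 24 samples = half a cycle at 1 kHz
late_take = sine(480, offset_samples=half_period)  # same tone, half a cycle late

in_phase_sum = [a + b for a, b in zip(take, take)]
out_of_phase_sum = [a + b for a, b in zip(take, late_take)]
print(peak(in_phase_sum), peak(out_of_phase_sum))
```

The in-phase sum doubles in level, while the half-cycle-late sum almost disappears. A real vocal contains many frequencies, so only some of them cancel at any given delay, which is what makes the result sound hollow or choppy rather than silent.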

You can record the same verse two times and use it as a chorus effect between the music. It all depends on you.

When you add stereo effects (stereo delay, reverb, etc.) to mono vocal tracks, the signal is converted into a stereo signal, so keep in mind that you should always bounce to a stereo track; otherwise, you will lose the effects in the bounced track.

Recording Other Instruments: Mono or Stereo?

Phase cancellation issues also arise when you record other acoustic instruments. However, there are stereo miking techniques that minimize the phase issue to an extent.

If you are recording drums, acoustic guitar, or any other instrument that needs a mic or has a mono output, you should record in a mono track. After the recording, you can manipulate the stereo field of the sound to create stereo effects on it.

In drums, we need several mics to record all the components, so you should use separate mono tracks for all the mics and combine them later in the mix as per your need.

You can record guitars, pianos, etc., on a stereo track with different stereo miking techniques. After recording, you can pan the sound to put the instrument in a particular place.

For instruments with mixed harmonics, such as violin and saxophone, you should record on a mono track; otherwise, you will get phase cancellation issues resulting in a choppy sound, as with stereo vocals.

A/B Technique Recording in Stereo

When it comes to stereo recording, the A/B recording technique is very popular. It’s also called the “Spaced Pair” recording technique. You need two microphones to record with this technique.

These microphones are positioned 3 to 10 feet away from the sound source and capture the same sound from different positions.

The sound reaches the two microphones after travelling for different lengths of time, because each one is at a different distance from the source. This creates phase and amplitude differences in the input. This method produces a clearer effect when we use mics with higher proximity effects.

Our brain is very good at analyzing phase and amplitude differences. When this stereo sound reaches our ears, the brain recreates the location of the source; thus, you will get a very clear stereo effect.

For example, let’s assume we place the two microphones 50 cm apart to capture a source, creating a time difference (phase difference) of 1.2 to 1.5 milliseconds.
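The 50 cm example can be checked with a small Python calculation. This is a sketch under assumptions: a speed of sound of 343 m/s, straight-line paths, and made-up microphone and source coordinates in metres. The function name `time_difference_ms` is hypothetical.

```python
import math

SPEED_OF_SOUND_M_S = 343.0   # assumed: air at about 20 °C


def time_difference_ms(source_xy, mic_a_xy, mic_b_xy):
    """Arrival-time gap between two microphones, in milliseconds."""
    path_a = math.dist(source_xy, mic_a_xy)
    path_b = math.dist(source_xy, mic_b_xy)
    return abs(path_a - path_b) / SPEED_OF_SOUND_M_S * 1000.0


# Two mics 50 cm apart, centred on the origin (coordinates in metres).
mic_a = (-0.25, 0.0)
mic_b = (0.25, 0.0)

# Source far off to one side, on the line through both mics: the largest possible gap.
print(f"{time_difference_ms((3.0, 0.0), mic_a, mic_b):.2f} ms")   # 1.46 ms

# Source straight in front, equally far from both mics: no gap at all.
print(f"{time_difference_ms((0.0, 2.0), mic_a, mic_b):.2f} ms")   # 0.00 ms
```

With this spacing the gap can never exceed about 1.46 ms, which is in line with the upper end of the range quoted above. Sources closer to the centre line produce smaller gaps.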

After recording, we pan the two tracks hard left and hard right, respectively. If you don’t pan the tracks, the two signals sit on top of each other and you will hear the most extreme phasing effect in the output.

After panning to the right and left, respectively, you will get a pleasant stereo effect in the output. That’s how the A/B miking technique works.

Mono to Stereo Conversion Methods and Techniques

When we record on a mono track, we have two options to output the audio: routing the sound through a mono output channel or routing it through the stereo master output.

When we route through stereo master output, the resultant sound is converted to stereo format, but it’s not a stereo sound; it’s just a stereo format.
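The difference between stereo format and stereo sound can be shown with a tiny Python sketch. It assumes samples are plain floats and that a stereo frame is a `(left, right)` pair; both helper names are hypothetical.

```python
def mono_to_stereo_format(mono_samples):
    """Copy each mono sample to both channels: stereo format, but still the same sound twice."""
    return [(sample, sample) for sample in mono_samples]


def is_dual_mono(stereo_frames):
    """True when the left and right channels are identical in every frame."""
    return all(left == right for left, right in stereo_frames)


frames = mono_to_stereo_format([0.0, 0.25, -0.5, 1.0])
print(frames)
print(is_dual_mono(frames))
```

Because both channels carry identical samples, the file has two channels but no left-right difference for the brain to work with.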

The actual process of converting mono sound into stereo sound is a combination of different methods and techniques such as adding reverb and delay effects, phase manipulation by stereo widening effects, etc.

Using stereo reverbs and delays is a good way to convert any mono sound into stereo sound. With these effects, you add a virtual stereo environment in the sound, which plays with the human brain to make it feel like it’s listening to stereo audio.

Every DAW (digital audio workstation) comes with several stereo features, including stereo reverb, delay, and stereo widening effects. So there’s no need to worry about turning any mono sound into a stereo sound.

Dynamics: Is stereo louder than mono?

No, a true stereo signal is not louder than mono. However, if you are routing the same mono audio to a stereo output, you will obviously get a louder sound due to the presence of two speakers.

But when you record and process true stereo audio at the same level as mono audio, the stereo audio is not louder than the mono one, disregarding any phase cancellation issues.

It’s just that the stereo signals are routed through two different speakers and reach your ears from different directions.

So, the stereo signal is not louder than the mono signal as long as no phase cancellation issue arises.

Bottom Line: Mono Vs Stereo

Here you’ve got all the basic information about stereo and mono signals. Newbies are always confused between mono and stereo.

Well, I think after reading this article you have the answers to the questions you had about stereo and mono sound and the applications of both in audio recording.

If you have any questions regarding this topic, feel free to drop them in the comment box below. I would be happy to answer all your questions.

And don’t forget to share this article with others on social media. This will help them to understand the basic theory of stereo and mono audio. Sharing also encourages me to write more valuable content for you.
